Introduction

- Artificial Intelligence: The capability of machines to imitate intelligent human behaviour.

- Machine Learning: A subset of AI where systems learn and improve from experience automatically.

The financial services sector is rapidly embracing Artificial Intelligence (AI) to enhance efficiency, decision-making, and customer experience through the use of Machine Learning (ML), a key subset of AI. However, this technological evolution brings with it complex cyber security challenges. Navigating these challenges is crucial for protecting sensitive financial data and maintaining consumer trust in an increasingly digital financial landscape. According to a white paper by The Economist Intelligence Unit, banks and insurance companies are expected to see an 86% increase in AI-related investments by 2025, underscoring the growing importance of these technologies in the sector. [1]

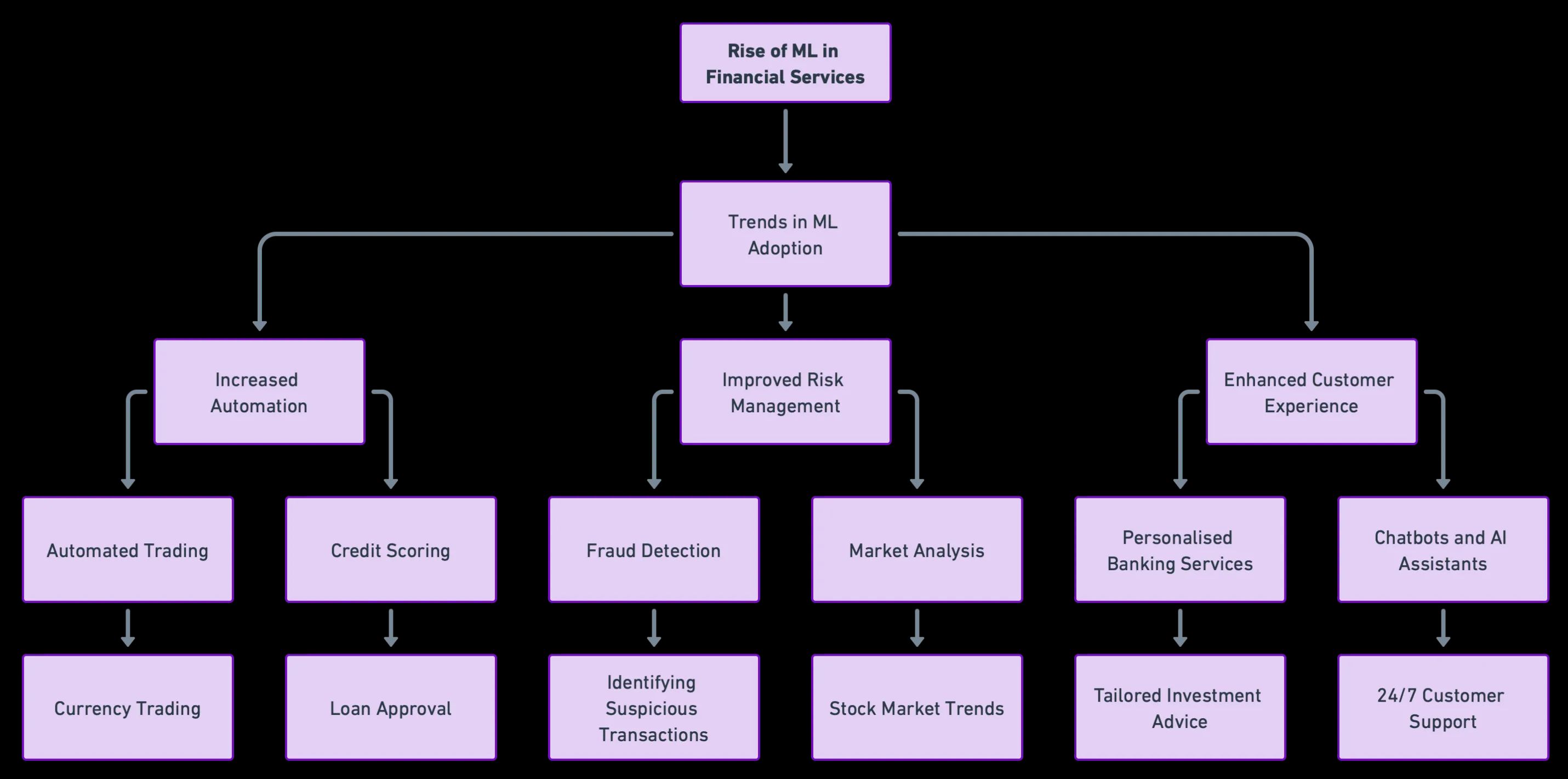

The Rise of ML in Finance

Machine Learning is transforming the financial services industry, offering innovative solutions for complex problems. Applications range from algorithmic trading, where ML algorithms analyse vast amounts of market data to inform trading decisions, to customer service, where AI-driven tools are reshaping client interactions. Risk management and fraud detection are other critical areas where ML is making a significant impact, helping institutions predict loan defaults and identify fraudulent transactions more accurately than rule-based approaches alone.

Case Study: Mastercard’s Use of AI in Real-Time Payment Scams

A prime example of ML’s application in enhancing cyber security in financial services is Mastercard’s initiative to fight real-time payment scams. Utilising its AI capabilities, Mastercard has developed systems that analyse transaction data in real time, enabling the detection and prevention of fraudulent activities as they occur. This proactive approach demonstrates the potential of ML in safeguarding financial transactions and customer data. [2]

But with these advancements, the need for robust cyber security measures becomes more pressing. The use of ML in handling sensitive financial and personal data raises concerns about data privacy, model vulnerability, and regulatory compliance.

Cyber Security Risks

The integration of AI in financial services introduces distinct cyber security challenges, central to which is the issue of data privacy and protection. ML models require access to substantial volumes of data, often including sensitive personal and financial information. This reliance raises critical concerns around data privacy, and securing this data against potential breaches is paramount for financial institutions. Moreover, regulatory compliance, such as adherence to GDPR and sector-specific financial regulations, becomes a complex task, demanding vigilant data management and ethical model construction.

Model vulnerability represents another significant risk. ML models, especially those pivotal in decision-making, are prone to manipulative attacks, including adversarial attacks, in which inputs are subtly altered to induce a wrong prediction, and model poisoning, in which training data is corrupted to skew the model’s behaviour. Such vulnerabilities not only threaten the integrity of financial decisions but also expose institutions to financial losses and reputational harm.
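
To make the adversarial risk concrete, the following is a minimal sketch of an evasion attack against a toy linear fraud-scoring model. The weights, bias, feature values, and function names are hypothetical and chosen purely for illustration; they do not describe any real institution's model. For a linear model the gradient of the score with respect to the input is simply the weight vector, so stepping each feature against the sign of its weight is the fast gradient sign method in its simplest form.

```python
import math

# Hypothetical weights and bias of a toy logistic fraud-scoring model.
WEIGHTS = [1.5, -2.0, 0.5]
BIAS = -0.2


def fraud_score(features):
    """Return the model's estimated fraud probability for one transaction."""
    logit = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-logit))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def evade(features, epsilon):
    """Shift every feature by epsilon in the direction that lowers the score."""
    return [x - epsilon * sign(w) for x, w in zip(features, WEIGHTS)]


original = [0.6, 0.1, 0.4]
perturbed = evade(original, epsilon=0.3)
```

With these illustrative numbers, the original transaction scores above 0.5 and the perturbed one scores below it, even though no single feature moved by more than 0.3. Defences such as input validation, adversarial training, and monitoring for unusual feature combinations aim to make this kind of shift harder to exploit.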

Furthermore, the ‘black box’ nature of certain ML models poses substantial challenges in maintaining algorithmic transparency. This opacity can impede regulatory compliance efforts and erode customer trust. Financial institutions must therefore strive towards implementing explainable AI (XAI) frameworks, enhancing the interpretability and accountability of their ML-driven decisions. This move towards transparency is not just a regulatory requirement, but a critical factor in sustaining customer confidence and trust in the AI-driven financial services landscape.

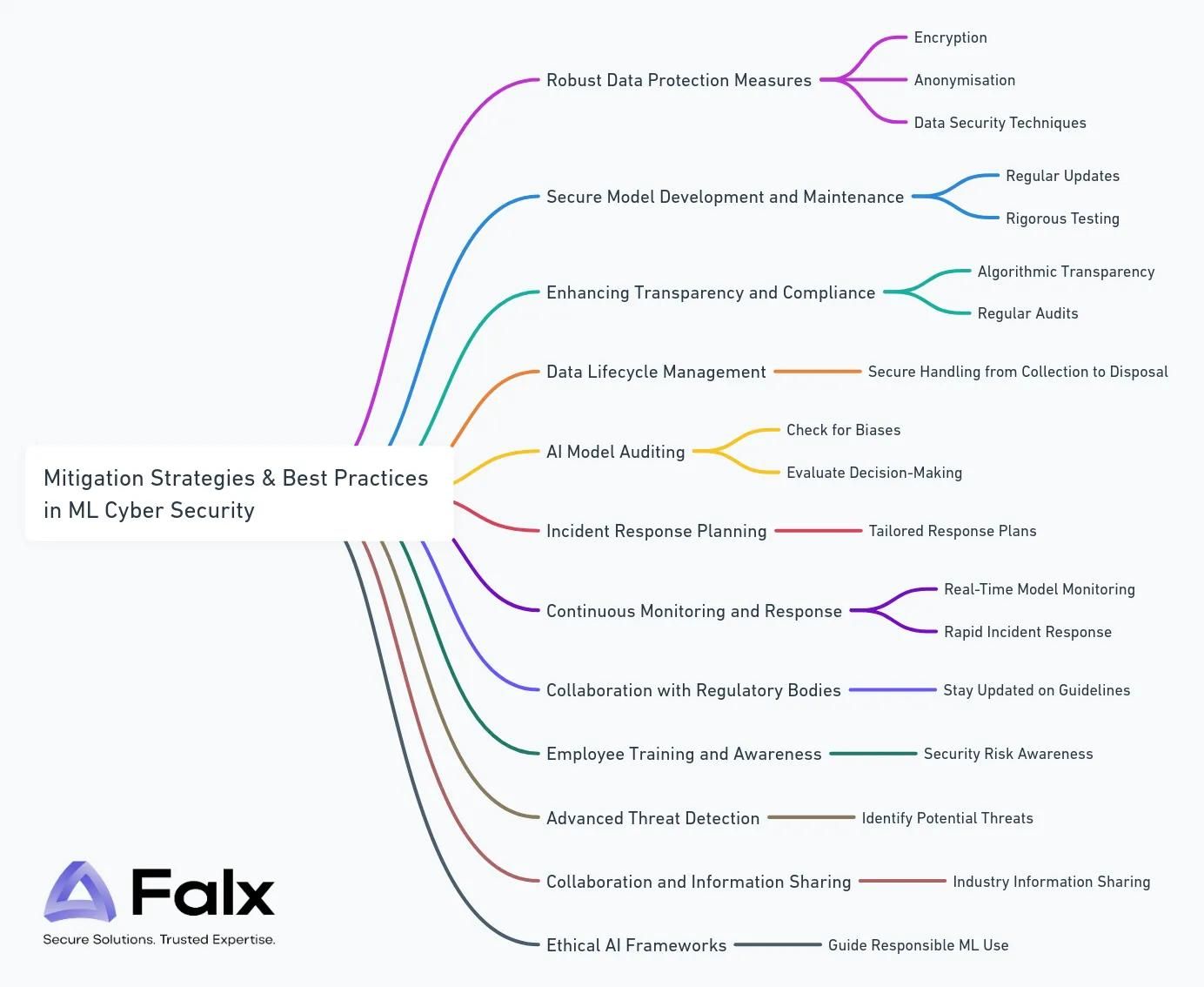

Mitigation Strategies

To address these challenges, financial institutions must implement comprehensive cyber security strategies that include:

Robust Data Protection Measures:

- Encrypt data at rest with a strong cipher such as AES-256 and protect data in transit with TLS 1.3.

- Utilise data tokenisation and masking techniques, especially for personally identifiable information (PII) and financial data.

- Apply strict access controls and authentication protocols, such as multi-factor authentication, to limit data access to authorised personnel only.
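
As a rough illustration of the masking and tokenisation bullet above, the sketch below masks an account number for display and derives a deterministic token from it with a keyed hash (HMAC-SHA256). This is an assumption-laden simplification: production tokenisation commonly relies on a secured token vault or a dedicated service, and the key shown here is a placeholder that would normally come from a key management system. The function names are hypothetical.

```python
import hashlib
import hmac


def mask_account_number(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    if visible <= 0 or len(value) <= visible:
        # Too short to reveal anything safely, so mask everything.
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def tokenise(value: str, secret_key: bytes) -> str:
    """Derive a deterministic, non-reversible token using HMAC-SHA256."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Placeholder key for illustration only; never hard-code real keys.
DEMO_KEY = b"replace-with-key-from-a-key-management-system"

masked = mask_account_number("4111111111111111")
token = tokenise("4111111111111111", DEMO_KEY)
```

Here `masked` is `************1111`, which is suitable for display, while `token` can stand in for the real value as a join key in training data without exposing it.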

Secure Model Development and Maintenance:

- Employ DevSecOps practices to integrate security into the ML development lifecycle.

- Use version control systems for ML models to track changes and roll back to secure versions if needed.

- Implement automated vulnerability scanning and static code analysis to identify security weaknesses in ML code and its dependencies so that they can be fixed before release.
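
One way to support model versioning and rollback is to record a cryptographic digest for every released model artefact and to check it before the artefact is loaded. The sketch below assumes the expected digest is stored somewhere trusted, such as a model registry; the function names and file layout are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Compute the SHA-256 hex digest of a file without loading it all at once."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file on disk matches the recorded digest."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A deployment step could refuse to load any model for which `verify_model_artifact` returns `False` and fall back to the last version whose digest still matches.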

Data Lifecycle Management:

- Implement data governance frameworks that define policies for data collection, storage, use, and disposal.

- Automate data lifecycle management using tools that enforce data retention policies and secure data deletion practices.

- Use data classification and inventory solutions to keep track of sensitive data throughout its lifecycle.
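
The retention and classification bullets above can be sketched as a small policy check. The classification labels and retention periods below are invented for illustration and are not regulatory guidance; a real policy would come from the institution's data governance framework.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention periods per data classification, in days.
RETENTION_DAYS = {
    "public": 3650,
    "internal": 1825,
    "pii": 365,
}


@dataclass(frozen=True)
class DataRecord:
    record_id: str
    classification: str
    created_on: date


def is_expired(record: DataRecord, today: date) -> bool:
    """Return True if the record has outlived its retention period."""
    retention = RETENTION_DAYS.get(record.classification)
    if retention is None:
        # Unclassified data is surfaced as an error rather than silently kept.
        raise ValueError(f"unknown classification: {record.classification}")
    return today > record.created_on + timedelta(days=retention)


def records_due_for_deletion(records, today: date):
    """List the ids of records whose retention period has passed."""
    return [r.record_id for r in records if is_expired(r, today)]
```

A scheduled job could pass the result of `records_due_for_deletion` to a secure deletion routine and log each removal for audit purposes.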

AI Model Auditing:

- Conduct bias and fairness audits using tools and methodologies that assess ML models for discriminatory patterns.

- Perform regular performance evaluations to ensure models maintain accuracy and reliability over time.

- Utilise third-party auditing services for independent assessment of ML models.
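
As one example of what a bias and fairness audit might measure, the sketch below compares approval rates across groups using two common metrics: the demographic parity difference and the disparate impact ratio. The group labels and function names are hypothetical, and what counts as an acceptable value is a policy decision that this sketch does not attempt to settle.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # No approvals at all, so no group is favoured.
    return min(rates.values()) / highest
```

For instance, if group A has 8 approvals out of 10 and group B has 4 out of 10, the parity difference is 0.4 and the impact ratio is 0.5, which would normally prompt a closer look at the model and its training data.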

Incident Response Planning:

- Develop ML-specific incident response protocols, considering scenarios like data breaches, adversarial attacks, and model tampering.

- Train incident response teams in identifying and responding to ML-related security incidents.

- Conduct regular simulated breach exercises to test and refine response strategies.

Secure Deployment of ML Models:

- Ensure secure deployment environments, whether on-premises or in the cloud, with hardened security configurations.

- Use containerisation (e.g., Docker) and orchestration tools (e.g., Kubernetes) to create isolated and controlled deployment environments.

- Implement robust access control and authentication for APIs exposing ML models to prevent unauthorised access.
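
A minimal sketch of API key checking for a model endpoint follows. It assumes keys are stored only as SHA-256 digests and compared in constant time; the in-memory dictionary stands in for a real credential store, and all names are hypothetical. In practice this would sit behind TLS and usually alongside a fuller scheme such as OAuth 2.0 or mutual TLS.

```python
import hashlib
import hmac


def hash_api_key(api_key: str) -> str:
    """Return the SHA-256 hex digest of an API key for storage and comparison."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def is_authorised(client_id: str, presented_key: str, key_store: dict) -> bool:
    """Check a presented key against the stored digest for the client."""
    expected = key_store.get(client_id)
    if expected is None:
        return False
    presented = hash_api_key(presented_key)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(presented, expected)


# Hypothetical credential store mapping client ids to key digests.
KEY_STORE = {"scoring-service": hash_api_key("example-key-for-illustration")}
```

A request handler would call `is_authorised` before passing any input to the model and return an authentication error otherwise.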

Safe Inference and Model Serving:

- Protect inference data streams to ensure confidentiality and integrity of the data being processed.

- Monitor model serving endpoints for signs of tampering or unusual access patterns.

- Use rate limiting and encryption to secure API endpoints where models are served.
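
Rate limiting for a model-serving endpoint is often implemented as a token bucket. The sketch below is one simple, single-process variant; the class name and parameters are hypothetical, and a distributed deployment would typically keep the bucket state in a shared store instead.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        """Consume one token if available and report whether the call may proceed."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Top up the bucket for the time elapsed, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

Keeping one bucket per client or API key limits how quickly any single caller can query the model, which also raises the cost of model extraction attempts that depend on very large numbers of queries.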

Handling Model Drift and Retraining:

- Monitor deployed models for performance degradation and model drift, where the model’s predictions become less accurate over time.

- Establish protocols for retraining models with new data in a secure and privacy-compliant manner.

- Implement automated triggers for model retraining and validation, ensuring that models remain accurate and relevant.
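
One widely used drift signal is the Population Stability Index (PSI), which compares how a feature or score is distributed across bins at training time with how it is distributed in production. The sketch below assumes the values have already been binned into counts; the 0.2 threshold is a common rule of thumb and is treated here as an assumption to be tuned, not a fixed standard. The function names are hypothetical.

```python
import math


def population_stability_index(expected_counts, actual_counts, epsilon=1e-6):
    """Compute PSI between a baseline and a current binned distribution."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total == 0 or actual_total == 0:
        raise ValueError("distributions must not be empty")
    score = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Clamp shares away from zero so the logarithm stays defined.
        e_share = max(expected / expected_total, epsilon)
        a_share = max(actual / actual_total, epsilon)
        score += (a_share - e_share) * math.log(a_share / e_share)
    return score


def needs_retraining(psi_value: float, threshold: float = 0.2) -> bool:
    """Flag the model for review when drift exceeds the chosen threshold."""
    return psi_value > threshold
```

An automated trigger could compute the index on a schedule and open a retraining and validation job whenever `needs_retraining` returns `True`.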

Secure Model Decommissioning:

- Develop processes for safely decommissioning and retiring models that are no longer in use.

- Ensure that all associated data and resources are securely wiped or transferred, following data retention policies.

- Document and audit the decommissioning process to maintain a record of actions taken for security and compliance purposes.

Best Practices

Enhancing Transparency and Compliance:

- Adopt explainable AI (XAI) frameworks to improve the interpretability of ML decisions, addressing the ‘black box’ issue.

- Regularly review ML models for compliance with GDPR, PCI DSS, and other relevant financial and data protection regulations.

- Conduct privacy impact assessments (PIAs) to evaluate how ML models process and affect personal data.

Continuous Monitoring and Response:

- Deploy real-time monitoring tools like SIEM (Security Information and Event Management) systems with tailored use cases for ML environments.

- Integrate anomaly detection systems to flag unusual model outputs or data patterns.
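
As a very simple illustration of flagging unusual model outputs, the sketch below compares new scores against a baseline using a z-score. The threshold of 3 standard deviations is an assumption, the function name is hypothetical, and real deployments usually combine several more robust detectors.

```python
import statistics


def find_anomalies(baseline, new_values, z_threshold=3.0):
    """Return the new values that lie far from the baseline mean."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)  # Needs at least two baseline points.
    if stdev == 0:
        # A constant baseline makes any different value anomalous.
        return [v for v in new_values if v != mean]
    return [v for v in new_values if abs(v - mean) / stdev > z_threshold]


# Hypothetical fraud scores observed during normal operation.
baseline_scores = [0.10, 0.12, 0.11, 0.09, 0.10, 0.11, 0.10, 0.09]
flagged = find_anomalies(baseline_scores, [0.11, 0.95])
```

Here only the score of 0.95 is flagged. Events like this could be forwarded to the SIEM so that analysts see model behaviour alongside other security signals.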

Collaboration with Regulatory Bodies:

- Engage in regular dialogues with regulatory authorities to stay updated on emerging ML security standards and guidelines.

- Participate in industry consortia focused on developing best practices for ML in financial services.

Employee Training and Awareness:

- Implement specialised training programmes for developers and data scientists on secure ML practices.

- Conduct regular cyber security awareness training for all employees, emphasising the risks associated with ML systems.

Advanced Threat Detection:

- Leverage threat intelligence platforms to predict and identify emerging cyber security threats.

- Use behavioural analytics to detect insider threats and compromised user credentials in ML environments.

Collaboration and Information Sharing:

- Join industry-specific cyber security information sharing and analysis centres (ISACs) for collaborative threat intelligence.

- Exchange anonymised cyber security incident data with peer institutions to improve collective defence strategies.

Ethical AI Frameworks:

- Develop internal ethical guidelines for the use of AI and ML, focusing on accountability, fairness, and transparency.

- Appoint an ethics board or committee to oversee the ethical deployment of ML technologies.

Conclusion

The integration of Artificial Intelligence and Machine Learning into the financial services sector represents a significant advancement in technological capabilities, driving innovation and efficiency. However, it also introduces complex cyber security challenges. As financial institutions continue to adopt these technologies, the necessity of implementing robust cyber security measures cannot be overstated. It is crucial not only to protect sensitive data but also to maintain the integrity and trustworthiness of these advanced systems. Ensuring compliance, safeguarding against vulnerabilities, and maintaining transparency are not just regulatory requirements; they are essential for sustaining customer confidence.

At Falx, we understand the intricacies and challenges presented by AI and ML in cyber security. We are committed to helping you navigate these challenges effectively.

Reach out to us for a discussion, and let’s collaborate to strengthen the security and integrity of your Machine Learning or Artificial Intelligence initiatives.